Speed up your data team with ELT instead of ETL

Share

How long does it take to create an absolutely new report based on new data with some data transformations? Is it possible to reuse the same views and tables for a new report? If yes - how long will take to update some view or table with just one additional column?

The answers

More than one or two days? That’s unacceptable. Looks like you’re still using the ETL process for your data. So let’s dive deeper to understand the problem and why you should definitely switch to the ELT pipeline.

The classic way for data pipelines was Extract → Transform → Load.

The first step is just uploading data. The second step is to prepare your data, reshape it, clean it, and adjust it with additional metadata. After these steps, load the prepared data into your data warehouse.

Who works on the second step and what exactly do they do?

- Data analysts should prepare a detailed description of all transformations, columns, and formats that they need.

- Then data engineers write a code, deploy it to the stage or QA environment.

- The data analyst will make sure everything is done correctly.

- Devops will deploy code to production.

It will take a few days, maybe one week. Seems easy, right? But…

Your stakeholder asked you about one more detail and if you don’t have the right data - now you’re back to step 1 and doing one more cycle of the same job.

Just imagine how many cycles you will do with the new ad-hoc requests.

That was an interesting adventure but we don’t prefer this method and usually want results in a shorter period of time. This is where ELT comes into the game.

Remember: disk space is cheap, people time is expensive

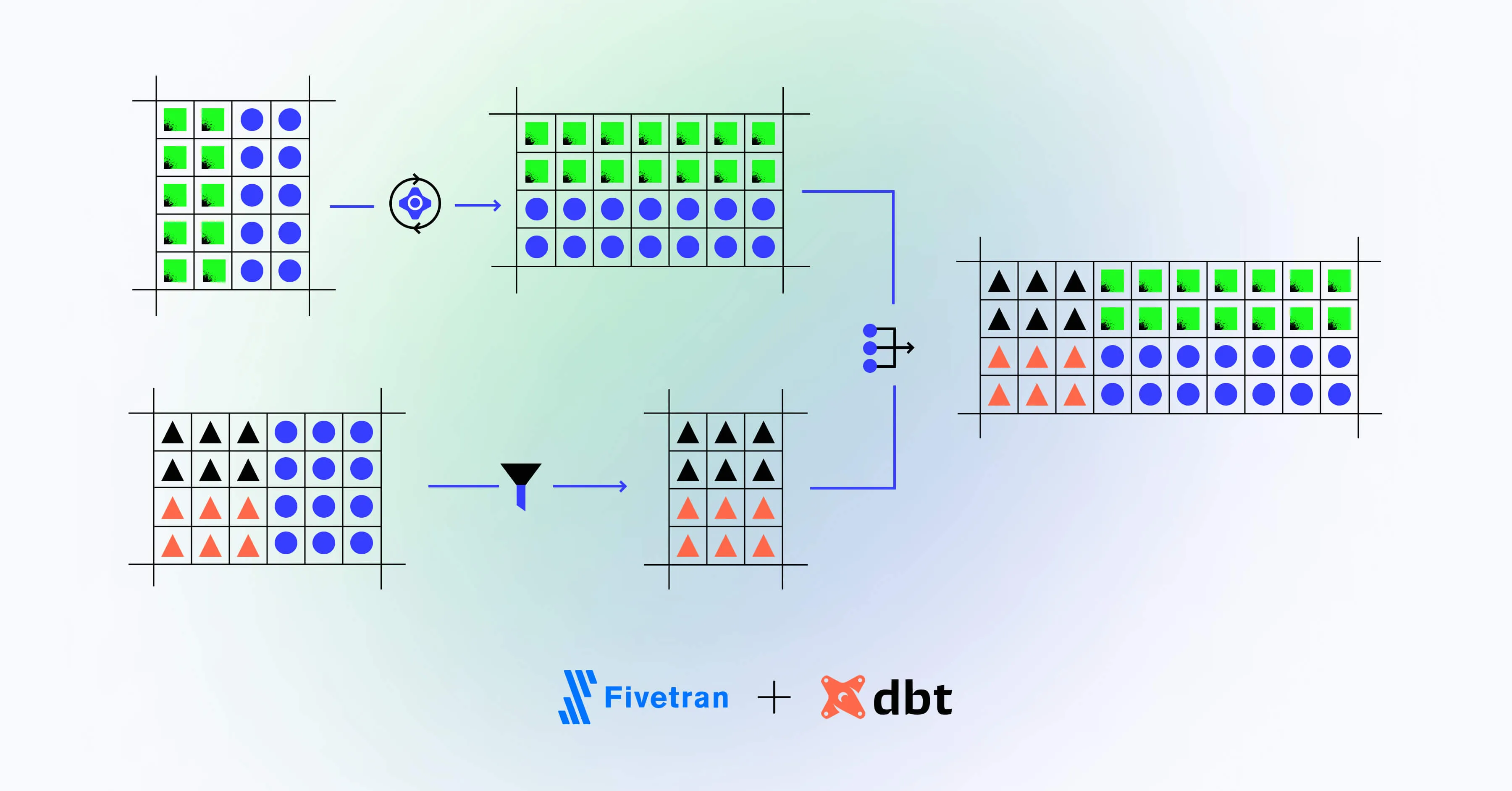

Your new pipeline: Extract → Load → Transform

Everything was invented before us. Hevo data, Luigi, Blendo, Matilion, Talend, StreamSets, Etleap, Airflow, Kafka, Data form, dbt, holistics - tools which can help you a lot, so try these and remember you don’t solely have to use SQL. So that’s the flow:

- Data engineers should just upload all raw data into your DWH.

- Data analysts will create models (view or tables, as you wish) just with SQL and deploy them with one command.

So now data engineers no longer need discussions with analysts about requirements and column names - they just write a code for the data pipeline.

Analysts decide what to include into models and update them if needed. This is all done without data engineers, DevOps, or writing new requirements. Just SQL and the command line.

We’re using DBT for data modeling, data quality check, and documentation. Each step is covered by an analyst who creates or updates the data model:

- create a data model and deploy it

- add tests and documentation in schema.yaml file

Also, it’s easy to deploy your data documentation as a static website inside your company.

Let’s summarize the benefits:

- much lower time to respond to ad-hoc requests

- analysts manage their flow themselves

- tools that allow your data models to be easily covered by tests

- data documentation is accessible and always up to date

Using the wrong ways you lose your money and the possibilities to grow. Only 31% of companies identify themselves as being data-driven. Are you one of them?

Our team is ready to help you with data orchestration using the best practices. Improve your data flow and become a real data-driven company.

How long does it take to create an absolutely new report based on new data with some data transformations? Is it possible to reuse the same views and tables for a new report? If yes - how long will take to update some view or table with just one additional column?